What are Load Balancers?

What is Load Balancing?

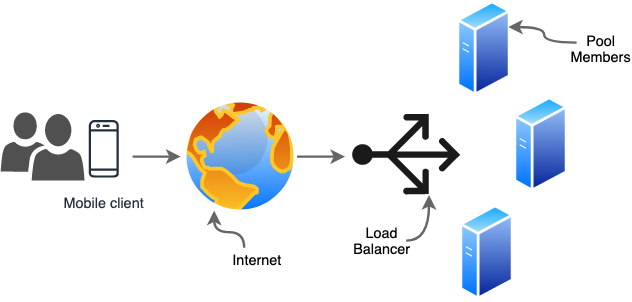

Load Balancing refers to efficiently distributing incoming traffic across a group of backend servers, also known as a server farm or server pool.

Modern websites must serve millions of concurrent requests from users or clients and return the correct text, images, video, or application data, all in a fast and reliable manner. To scale cost-effectively to meet this high volume, modern computing best practice generally requires adding more servers.

A load balancer is a hardware device or software component that acts as a reverse proxy and distributes network or application traffic across a number of servers. Load balancers are used to increase the capacity (concurrent users) and reliability of applications. They do this by routing requests only to servers that are available (healthy). They also make it easy to add and remove servers dynamically based on demand.

For example, during a Black Friday sale, Amazon and other e-commerce applications add a large number of servers to their server pools in order to handle the high volume of traffic.

Load Balancing Algorithms

Round Robin

Round Robin means servers will be selected sequentially. The load balancer will select the first server on its list for the first request, then move down the list in order, starting over at the top when it reaches the end.
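The selection order described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular load balancer implements it; the class name `RoundRobinBalancer` and the server names are made up for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in list order, wrapping around at the end."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        # cycle() repeats the list endlessly: first, second, ..., last, first, ...
        self._rotation = cycle(list(servers))

    def next_server(self):
        return next(self._rotation)


# Hypothetical server names, used only for illustration.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Note that this sketch ignores how long each request takes, so a slow server still receives the same share of requests as a fast one.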

Least Connections

Least Connections means the load balancer selects the server with the fewest active connections. It is recommended when traffic results in longer sessions. It is slightly costlier than Round Robin, because the load balancer has to track per-server connection counts (metadata) and compare them to determine which server has the fewest connections.
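The extra bookkeeping can be sketched as follows. This is a simplified, single-threaded illustration: the class `LeastConnectionsBalancer` and its `acquire`/`release` methods are hypothetical names, and a real load balancer would also need to handle concurrency and failed servers.

```python
class LeastConnectionsBalancer:
    """Tracks active connections per server and picks the least loaded one."""

    def __init__(self, servers):
        # The "metadata": a count of open connections for each server.
        self._active = {server: 0 for server in servers}

    def acquire(self):
        # Linear scan for the smallest count; ties go to the earliest server.
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server closes.
        if self._active[server] > 0:
            self._active[server] -= 1


# Hypothetical server names, used only for illustration.
lb = LeastConnectionsBalancer(["app-1", "app-2"])
first = lb.acquire()   # both idle, so the first server is chosen
second = lb.acquire()  # app-1 now has one connection, so app-2 is chosen
lb.release(first)      # the connection to app-1 closes
third = lb.acquire()   # app-1 is the least loaded again
print(first, second, third)  # app-1 app-2 app-1
```

The `min` call scans every server on each request, which is the additional computation mentioned above.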

IP Hash

With the IP Hash algorithm, the load balancer will select which server to use based on a hash of the source IP of the request, such as the visitor’s IP address. This method ensures that a particular user will consistently connect to the same server.
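A minimal sketch of the idea, assuming a simple hash-then-modulo mapping (actual load balancers may use a different hash function or a consistent-hashing scheme). The function name `pick_server`, the server names, and the IP address are illustrative only.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server in a stable, repeatable way."""
    # hashlib is used instead of the built-in hash(), whose string hashes
    # are randomized per process and would not be stable across restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical pool and a documentation-range IP address.
servers = ["app-1", "app-2", "app-3"]
chosen = pick_server("203.0.113.7", servers)
# The same IP maps to the same server on every call.
print(chosen == pick_server("203.0.113.7", servers))  # True
```

With this modulo approach, the mapping stays stable only while the server list is unchanged; adding or removing a server can move many clients to a different backend.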

Sticky Sessions

Some applications require that a user continues to connect to the same backend server. An IP Hash algorithm creates this affinity based on the client's IP address. Another way to achieve it, at the web application level, is through sticky sessions, where the load balancer sets a cookie and all requests from that session are directed to the same physical server.

For example, in a shopping cart application the items in a user’s cart might be stored in the browser session until the user is ready to make a purchase. Changing servers in the middle of a shopping session can cause performance issues or outright transaction failure. In such cases, it is essential that all requests from a client are sent to the same backend server for the duration of the session.
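Cookie-based stickiness can be sketched roughly like this. It is an illustration under simplifying assumptions: cookies are modeled as a plain dictionary, the cookie name `LB_SERVER` is made up, and the cookie stores the server name directly, whereas a production load balancer would more likely use an opaque or signed value.

```python
from itertools import cycle

COOKIE_NAME = "LB_SERVER"  # hypothetical cookie name


class StickyBalancer:
    """Pins a session to the server named in its cookie."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._rotation = cycle(self._servers)

    def route(self, cookies):
        """Return (server, cookies_to_set) for one incoming request."""
        pinned = cookies.get(COOKIE_NAME)
        if pinned in self._servers:
            # Returning visitor: keep using the pinned server.
            return pinned, {}
        # New session (or the pinned server left the pool): pick a server
        # round-robin and ask the client to remember it.
        server = next(self._rotation)
        return server, {COOKIE_NAME: server}


# Hypothetical server names, used only for illustration.
lb = StickyBalancer(["app-1", "app-2"])
server, set_cookies = lb.route({})        # first request, no cookie yet
again, _ = lb.route(set_cookies)          # follow-up request with the cookie
other, _ = lb.route({})                   # a different client
print(server, again, other)  # app-1 app-1 app-2
```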

Health Check

Load balancers should forward requests only to “healthy” backend servers. The load balancer monitors the health of all registered backend servers by attempting to connect to each one using the protocol and port defined by the forwarding rule, to ensure that the server is listening. If a server fails the health check, it is automatically removed from the active pool, and requests are not forwarded to it until it passes the health check again.
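A minimal sketch of such a check, assuming a plain TCP connection attempt counts as "listening". The function names `is_healthy` and `healthy_pool`, the timeout value, and the addresses are assumptions made for the example; real load balancers typically also run checks on a schedule and require several consecutive failures or successes before changing a server's status.

```python
import socket


def is_healthy(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat the server as unhealthy.
        return False


def healthy_pool(servers, check=is_healthy):
    """Keep only the (host, port) pairs that pass the health check."""
    return [(host, port) for host, port in servers if check(host, port)]


# Hypothetical backend addresses.
pool = [("10.0.0.1", 8080), ("10.0.0.2", 8080)]
# A stubbed check keeps this example deterministic; omit `check` to use
# the TCP probe defined above.
active = healthy_pool(pool, check=lambda host, port: host != "10.0.0.2")
print(active)  # [('10.0.0.1', 8080)]
```

Re-running `healthy_pool` periodically would let a recovered server rejoin the active pool once it passes the check again.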

Check out how Spring Boot exposes health information.